PROJECT PORTFOLIO

MonetGAN. Painting with Generative Adversarial Networks: Generating Monet-Style Images Using Novel Techniques

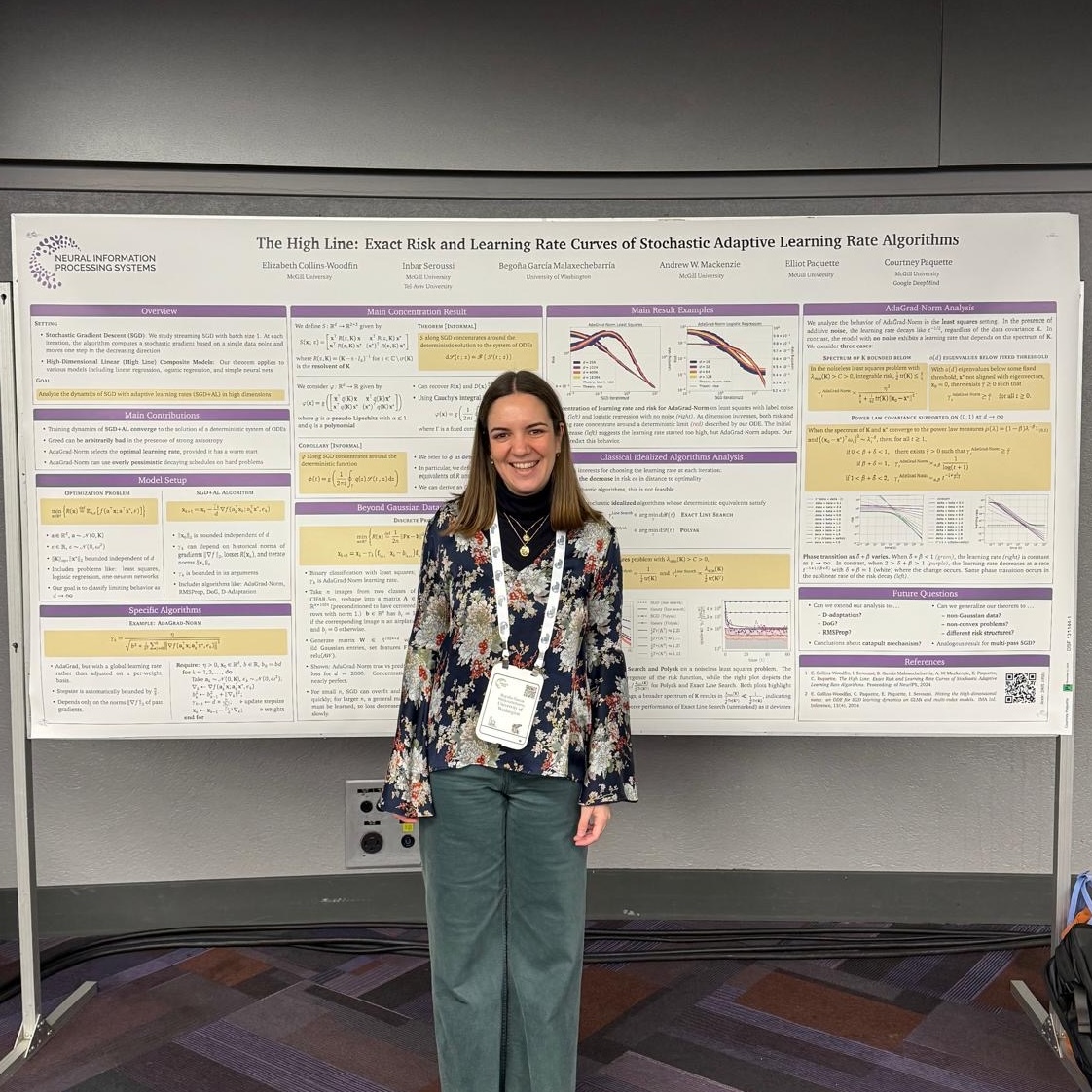

Garrett Devereux, Deekshita S. Doli, Begoña García Malaxechebarría

This project was developed as part of the graduate deep learning course at the University of Washington, taught by Ranjay Krishna and Aditya Kusupati.

Generative Adversarial Networks (GANs) have emerged as a powerful and versatile tool for generative modeling. In recent years, they have gained significant popularity across research domains, particularly image generation, where they let researchers tackle challenging problems by generating realistic samples from complex data distributions.

In the art industry, where tasks often require significant

investments of time, labor, and creativity, there is a

growing need for more efficient approaches that can streamline

subsequent project phases. To this end, we present

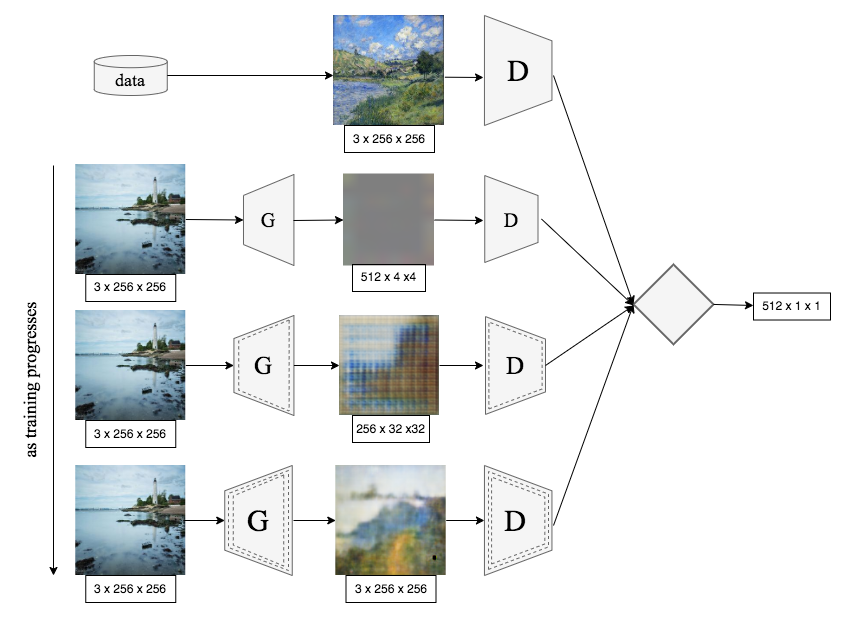

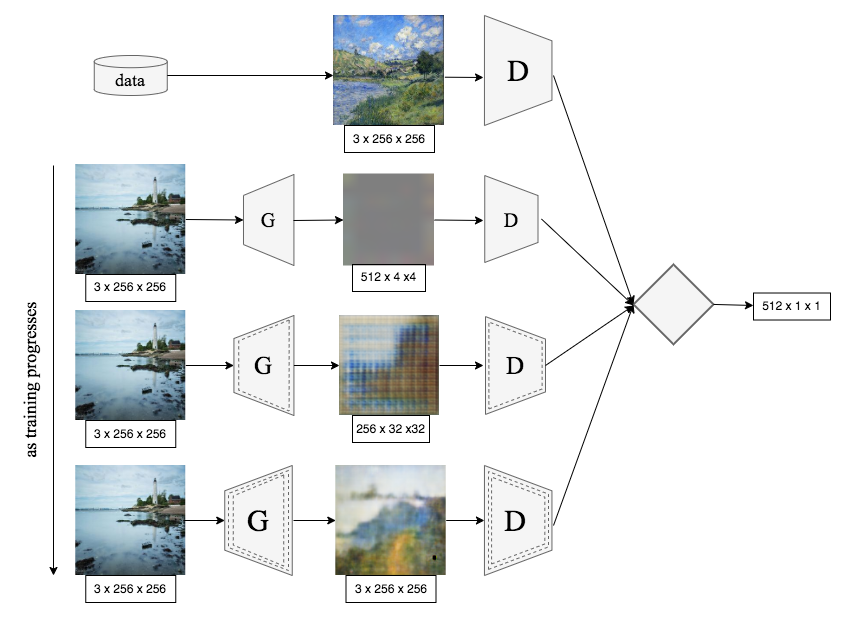

MonetGAN, a pioneering framework based on the Least

Squares Deep Convolutional CycleGAN, specifically tailored to

generate images in the distinctive style of 19th-century renowned

painter Claude Monet. After achieving

non-trivial results with our baseline model, we explore the

integration of advanced techniques and architectures such

as ResNet Generators, a Progressive Growth Mechanism,

Differential Augmentation, and Dual-Objective Discriminators.
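A least-squares CycleGAN baseline typically combines two kinds of loss terms. The first is a least-squares adversarial loss, which pushes discriminator scores toward 1 for real images and toward 0 for generated ones. The second is an L1 cycle-consistency loss that penalizes round trips such as photo to Monet to photo that fail to reconstruct the input. The sketch below illustrates both terms. It is not MonetGAN's code, and it rests on several assumptions:

- Images are flattened to plain lists of floats.
- Discriminator outputs are given as lists of scores.
- The 0/1 targets and the 0.5 factors follow the original LSGAN formulation.
- The cycle weight `lam=10.0` is the CycleGAN paper's default, not a value reported for MonetGAN.
- All function names are hypothetical.

```python
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def lsgan_discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Push discriminator scores toward 1 on real images and toward 0 on generated ones."""
    real_term = _mean([(s - 1.0) ** 2 for s in d_real])
    fake_term = _mean([s ** 2 for s in d_fake])
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(d_fake: Sequence[float]) -> float:
    """Push the discriminator's scores on generated images toward the 'real' target 1."""
    return 0.5 * _mean([(s - 1.0) ** 2 for s in d_fake])


def cycle_consistency_loss(original: Sequence[float], reconstructed: Sequence[float]) -> float:
    """Mean absolute (L1) error between an image and its round-trip reconstruction."""
    return _mean([abs(a - b) for a, b in zip(original, reconstructed)])


def total_generator_loss(d_fake_monet, d_fake_photo, photo, photo_rec, monet, monet_rec, lam=10.0):
    """Hypothetical combined objective: adversarial terms for both translation
    directions (photo->Monet and Monet->photo) plus the weighted cycle terms."""
    adversarial = lsgan_generator_loss(d_fake_monet) + lsgan_generator_loss(d_fake_photo)
    cycle = cycle_consistency_loss(photo, photo_rec) + cycle_consistency_loss(monet, monet_rec)
    return adversarial + lam * cycle
```

Suppose the discriminator scores real images `[1.0, 0.8]` and generated images `[0.0, 0.2]`. The discriminator loss is then about 0.02, and the generator loss for those same generated scores is about 0.41. The usual motivation for the least-squares form is that it keeps penalizing generated samples that sit far from the decision boundary. This gives the generator more useful gradients than a saturating sigmoid cross-entropy loss would.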

Through our research, we find that gradually building up a model through small, incremental changes yields better results than introducing many different complexities at once. These outcomes underscore the intricacies of training GANs, while also opening promising avenues for further advances at the intersection of art and artificial intelligence.
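Of the techniques named in the abstract, Differentiable Augmentation (DiffAugment) is the easiest to illustrate in isolation. Its core idea is to apply the same family of random augmentations to both real and generated images whenever the discriminator sees them. This makes it harder for the discriminator to overfit to a limited set of real paintings, without pushing the generator to reproduce the augmentations themselves. The sketch below rests on these assumptions:

- Batches are shaped `(N, C, H, W)`.
- There are three hypothetical transforms (a brightness shift, a zero-padded translation, and a square cutout).
- The `ratio` defaults are assumed values, not the policies or settings MonetGAN used.

NumPy is used only to show the logic. A real training loop would write the same operations in an autodiff framework such as PyTorch or JAX, so that gradients flow through the augmentation back to the generator.

```python
import numpy as np


def rand_brightness(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Add one random offset in [-0.5, 0.5) to every pixel of each image."""
    offsets = rng.random((x.shape[0], 1, 1, 1)) - 0.5
    return x + offsets


def rand_translation(x: np.ndarray, rng: np.random.Generator, ratio: float = 0.125) -> np.ndarray:
    """Shift each image by up to `ratio` of its height/width; vacated pixels become zero."""
    n, _, h, w = x.shape
    max_dy, max_dx = int(h * ratio), int(w * ratio)
    out = np.zeros_like(x)
    for i in range(n):
        dy = int(rng.integers(-max_dy, max_dy + 1))
        dx = int(rng.integers(-max_dx, max_dx + 1))
        # Copy only the region that stays inside the frame after the shift.
        src_y = slice(max(0, -dy), min(h, h - dy))
        dst_y = slice(max(0, dy), min(h, h + dy))
        src_x = slice(max(0, -dx), min(w, w - dx))
        dst_x = slice(max(0, dx), min(w, w + dx))
        out[i, :, dst_y, dst_x] = x[i, :, src_y, src_x]
    return out


def rand_cutout(x: np.ndarray, rng: np.random.Generator, ratio: float = 0.5) -> np.ndarray:
    """Zero one random square patch per image (same position in every channel)."""
    n, _, h, w = x.shape
    ch, cw = int(h * ratio), int(w * ratio)
    out = x.copy()
    for i in range(n):
        top = int(rng.integers(0, h - ch + 1))
        left = int(rng.integers(0, w - cw + 1))
        out[i, :, top:top + ch, left:left + cw] = 0.0
    return out


def diff_augment(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Apply the same family of random transforms; call it on BOTH real and generated batches."""
    for transform in (rand_brightness, rand_translation, rand_cutout):
        x = transform(x, rng)
    return x


# Usage: augment both inputs before every discriminator evaluation.
rng = np.random.default_rng(0)
real = np.ones((2, 3, 8, 8))
fake = np.full((2, 3, 8, 8), 0.5)
real_aug, fake_aug = diff_augment(real, rng), diff_augment(fake, rng)
```

Each call draws fresh random parameters. The point is that real and generated images pass through the same kind of augmentation, not through identical transforms.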